Need help with your Django project?

Check our django services

We believe that all builds should be really fast because:

- Human time is way more valuable than compute time. That is why developers should not waste time waiting for builds to pass.

- Releasing software frequently is important, and slow builds should not delay releases.

- Going for a coffee until the build is done takes you out of the flow.

If you want to get your Django project up and running on GitHub Actions, check our previous article about GitHub Actions with Django and Postgres.

Initial setup

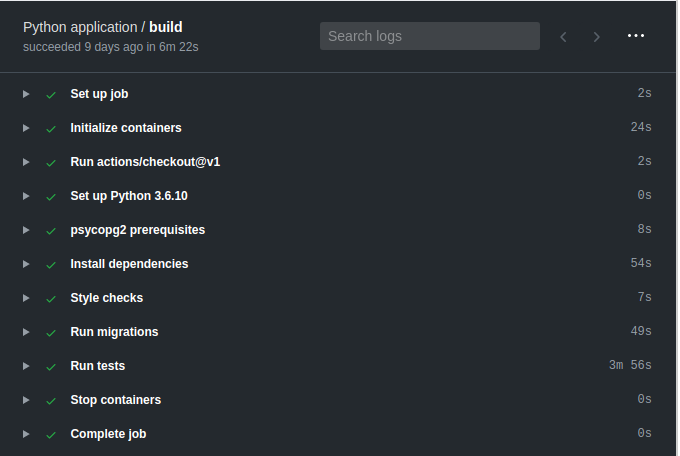

In this article, we will optimize a Django build that managed to sneak over the 5 min mark. In this particular case, we are using py.test to run our tests and GitHub Actions as CI. Nevertheless, all the points in this article apply to other test frameworks and continuous integration services as well.

We are starting with a standard Django API backend with ~1100 tests, most of which hit a PostgreSQL database. The tests alone take 3 min 30 sec to pass locally:

```
$ time py.test
py.test 148.41s user 4.03s system 72% cpu 3:31.69 total
```

When you combine that with all the other build steps, it adds up to 6 min 22 sec.

Create a settings file for your tests

It is a great idea to add a separate settings file for your test settings. That way, you can set some Django settings only while running your tests. If you're using Django Cookiecutter, you are probably already familiar with this concept.

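```
$ touch config/settings/test.py
```

```python
# config/settings/test.py
from .base import *  # Load everything from the base settings
```

Then tell py.test to load this settings file on every run by adding the following to your pytest.ini (the DJANGO_SETTINGS_MODULE option is read by the pytest-django plugin):

```ini
[pytest]
DJANGO_SETTINGS_MODULE=config.settings.test
```

Change Django password hashers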

To test different scenarios, we create users in our tests. By default, creating a user in Django is slow because the default password hasher (PBKDF2) is deliberately expensive to compute.

In your test.py, you can switch to a faster hasher such as MD5. MD5 is not secure, so only ever use it in your test settings.

```python
PASSWORD_HASHERS = ['django.contrib.auth.hashers.MD5PasswordHasher']
```

Before:

```
$ time py.test
py.test 148.41s user 4.03s system 72% cpu 3:31.69 total
```

After:

```
$ time py.test
py.test 142.21s user 2.63s system 71% cpu 3:25.96 total
```

It is not a huge improvement, but it is almost free.
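To see why the hasher matters, the stdlib-only sketch below compares two approaches. It times many rounds of PBKDF2-SHA256, the algorithm family behind Django's default hasher, against a single salted MD5 digest. This is a simplification, not Django's implementation. The helper names and the PBKDF2_ITERATIONS value are illustrative choices of ours, and Django's real iteration count differs between versions.

```python
import hashlib
import os
import time
from typing import Callable

# Illustrative work factor only; Django's real PBKDF2 default differs by version.
PBKDF2_ITERATIONS = 100_000


def slow_hash(password: str, salt: bytes) -> str:
    """Roughly what a PBKDF2-SHA256 hasher does: many rounds of HMAC-SHA256."""
    return hashlib.pbkdf2_hmac(
        "sha256", password.encode(), salt, PBKDF2_ITERATIONS
    ).hex()


def fast_hash(password: str, salt: bytes) -> str:
    """Roughly what an MD5 hasher does: one digest of salt + password."""
    return hashlib.md5(salt + password.encode()).hexdigest()


def seconds_for(hasher: Callable[[str, bytes], str], users: int = 20) -> float:
    """Time how long hashing one password per test user takes."""
    salt = os.urandom(16)
    start = time.perf_counter()
    for _ in range(users):
        hasher("s3cret", salt)
    return time.perf_counter() - start


if __name__ == "__main__":
    print(f"PBKDF2: {seconds_for(slow_hash):.3f}s for 20 users")
    print(f"MD5:    {seconds_for(fast_hash):.5f}s for 20 users")
```

Every test that creates a user pays the hashing cost once per password. The cheaper hasher trims a little from every such test.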

Turn off Django debug mode

Django is slower in debug mode (among other things, it records every SQL query it runs), so your tests are slower too if debug mode is on. Make sure DEBUG = False in your test settings.
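Putting the test-only settings from this article together, a minimal config/settings/test.py could look like the sketch below. It assumes the config/settings/base.py layout used earlier, so adjust the import to match your own project.

```python
# config/settings/test.py (sketch; assumes config/settings/base.py exists)
from .base import *  # noqa: F401,F403  Load everything from the base settings

# Fast but insecure hashing: fine for throwaway test users, never for production.
PASSWORD_HASHERS = ["django.contrib.auth.hashers.MD5PasswordHasher"]

# Debug mode adds overhead (for example, it records every SQL query).
DEBUG = False
```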

Before:

```
$ time py.test
py.test 142.21s user 2.63s system 71% cpu 3:25.96 total
```

After:

```
$ time py.test
py.test 137.21s user 2.63s system 71% cpu 3:15.96 total
```

Run your tests with --nomigrations

When you run your tests, the test runner gets all your migrations and tries to apply them one by one in order to create a test database. In projects with a lot of migrations, that may be extremely time-consuming.

With --nomigrations, pytest-django skips the migrations and builds the test database directly from your current models. In our case, that saves about a minute on every build.

```
$ time py.test
py.test 137.21s user 2.63s system 71% cpu 3:15.96 total
```

```
$ time py.test --nomigrations
py.test --nomigrations 85.86s user 1.96s system 68% cpu 2:08.22 total
```

Keep in mind that by skipping migrations, you are no longer checking for migration conflicts, which often appear in larger teams. Run your migrations in a separate, parallel build step instead.
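One way to wire up that separate step is a small script that your CI job runs alongside the test job. This is a hypothetical sketch. The script and its helper names are ours, and it assumes manage.py sits in the working directory. The makemigrations --check --dry-run and migrate --noinput options are standard Django management command flags.

```python
import subprocess
import sys
from typing import List, Sequence

# Commands for a separate CI step that covers what --nomigrations skips.
MIGRATION_CHECKS: List[List[str]] = [
    # Fails if a model changed without a matching migration file;
    # makemigrations also stops when it detects conflicting migrations.
    [sys.executable, "manage.py", "makemigrations", "--check", "--dry-run"],
    # Applies every migration, in order, to the (empty) CI database.
    [sys.executable, "manage.py", "migrate", "--noinput"],
]


def run_migration_checks(commands: Sequence[Sequence[str]] = MIGRATION_CHECKS) -> int:
    """Run each command in order and return the first non-zero exit code."""
    for command in commands:
        print("+", " ".join(command), flush=True)
        result = subprocess.run(list(command))
        if result.returncode != 0:
            return result.returncode
    return 0
```

From the project root, a CI step would call sys.exit(run_migration_checks()). This keeps the slow migration path out of the test job while still failing the build on conflicts.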

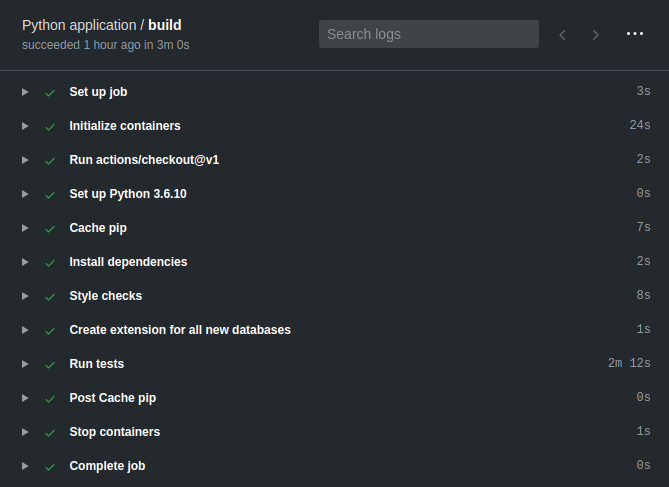

Cache dependencies on CI

Installing dependencies on a CI always takes time, even though most CI services have cache layers for the most common package managers. GitHub Actions recommends caching the ~/.cache/pip directory, but even with a cache, pip makes HTTP requests to check for newer versions of every package. This may take more than a minute, depending on the number of dependencies you have.

A nice hack is to cache the whole Python environment. That will reduce the time needed for package installation to a couple of seconds.
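Caching the whole environment only works if the cache key changes whenever your dependencies do. GitHub's hashFiles() computes its own digest. The stdlib sketch below only mirrors the idea behind the key used in the workflow: derive the key from the contents of the requirements files, so that any edit produces a new key and forces a fresh install. cache_key and demo are hypothetical helpers, and the pinned Django versions are just sample file contents.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Iterable


def cache_key(prefix: str, requirement_files: Iterable[str]) -> str:
    """Build a cache key that changes whenever any requirements file changes."""
    parts = [prefix]
    for name in requirement_files:
        # Hash the file contents: editing a file yields a different digest.
        parts.append(hashlib.sha256(Path(name).read_bytes()).hexdigest())
    return "-".join(parts)


def demo() -> None:
    """Show a cache miss after bumping a pinned version in a requirements file."""
    with tempfile.TemporaryDirectory() as tmp:
        base = Path(tmp, "base.txt")
        base.write_text("Django==3.0.5\n")
        before = cache_key("python", [str(base)])
        base.write_text("Django==3.0.6\n")  # bump a pinned dependency
        after = cache_key("python", [str(base)])
        print("cache hit" if before == after else "cache miss: reinstall packages")


if __name__ == "__main__":
    demo()
```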

In this GitHub Actions example, we build the cache key from the contents of requirements/local.txt and requirements/base.txt, so a change to either file invalidates the cache:

```yaml
steps:
  - uses: actions/checkout@v1

  - name: Set up Python 3.6.10
    uses: actions/setup-python@v1
    with:
      python-version: 3.6.10

  - name: Cache pip
    uses: actions/cache@v1
    with:
      path: /opt/hostedtoolcache/Python/3.6.10/x64/  # This path is specific to Ubuntu
      # If the requirements files change, the cache will not hit.
      key: python-${{ hashFiles('requirements/local.txt') }}-${{ hashFiles('requirements/base.txt') }}

  - name: Install dependencies
    # With a warm cache, the packages are already installed and pip has little to do.
    run: pip install -r requirements/base.txt -r requirements/local.txt
```

Parallelism with pytest-xdist

py.test normally creates a test database and then executes the tests one by one. In many cases, this does not make full use of the hardware.

Using pytest-xdist, you can run your tests in parallel. For example, if you have 4 available cores, you can run py.test --nomigrations -n 4. This will create 4 test databases, split your tests into 4 groups, and run them in parallel.

Using -n auto sets the number of worker processes to the number of cores on the machine.

```
$ time py.test --nomigrations -n auto
py.test --nomigrations -n auto 177.71s user 5.62s system 175% cpu 1:44.76 total
```

You can achieve the same using python manage.py test --parallel.
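Conceptually, a parallel run looks like the stdlib-only sketch below: N workers, each with its own test database and its own share of the tests. It is a simplification. By default, pytest-xdist hands tests to workers dynamically rather than in fixed round-robin groups. The test_&lt;name&gt;_gw&lt;N&gt; database naming only approximates the per-worker suffix that pytest-django uses. split_tests and worker_db_name are hypothetical helpers.

```python
import os
from typing import Dict, List


def split_tests(test_ids: List[str], workers: int) -> Dict[str, List[str]]:
    """Deal test IDs round-robin to workers named gw0, gw1, ..."""
    groups: Dict[str, List[str]] = {f"gw{i}": [] for i in range(workers)}
    for index, test_id in enumerate(test_ids):
        groups[f"gw{index % workers}"].append(test_id)
    return groups


def worker_db_name(db_name: str, worker_id: str) -> str:
    """Each worker gets its own test database, e.g. test_app_gw0."""
    return f"test_{db_name}_{worker_id}"


if __name__ == "__main__":
    workers = os.cpu_count() or 1  # roughly what "-n auto" picks
    tests = [f"tests/test_api.py::test_endpoint_{n}" for n in range(10)]
    for worker_id, ids in split_tests(tests, workers).items():
        print(worker_db_name("app", worker_id), len(ids), "tests")
```

Separate databases are what make this safe: two workers never write to the same tables at the same time.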

Final result

After all that effort, we managed to reduce the build time from 6:22 to 3:00, a reduction of more than 50% ⬇️
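The arithmetic behind that number, using the timings reported above, can be checked with a few lines of Python. The helper names are our own.

```python
def to_seconds(duration: str) -> float:
    """Convert an "m:ss" or "m:ss.ff" duration into seconds."""
    minutes, seconds = duration.split(":")
    return int(minutes) * 60 + float(seconds)


def reduction(before: str, after: str) -> float:
    """Fraction of time saved going from `before` to `after`."""
    return 1 - to_seconds(after) / to_seconds(before)


print(f"Whole build: {reduction('6:22', '3:00'):.1%}")       # 52.9%
print(f"Tests only:  {reduction('3:31.69', '1:44.76'):.1%}")  # 50.5%
```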

If that is not enough, get more powerful hardware

In many cases, you just have a lot to test. Some tests are slow by design, and you can't just skip them. If your build already makes full use of your hardware and the tests are still slower than you'd like, it is time for better hardware.

Out of the box, GitHub Actions gives you 2 cores and 7GB of RAM. This is more than enough to start, but when the project gets big and you have a lot of tests in your suite you may consider hosting your own GitHub Actions runner.

Running on a machine with more cores can significantly reduce the build time. Most CI services offer either some kind of self-hosted solution or the option to run on hardware of your choice.

At the time of writing, GitHub Actions doesn't have support for choosing different hardware to run your tests on. In their forum, they promised to include it at some point, but if you need more power right away, you'll have to host some workers on your own.

And finally, a couple of bad practices to avoid.

Don't use SQLite to run your tests faster.

There are many articles on the web that suggest running your tests against an in-memory SQLite database to save time during builds. We don't recommend doing this. To be confident that your code works properly in production, you need to run your tests against the same database engine you use in production, ideally even the same version.

Often your codebase depends on PostgreSQL-specific features that SQLite does not implement or, even worse, implements differently.
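Python's built-in sqlite3 module gives a quick way to see the kind of difference we mean. The sketch below shows two SQLite behaviors that PostgreSQL does not share. First, SQLite's LIKE ignores case for ASCII letters; Django's documentation notes that contains therefore acts like icontains on SQLite. Second, SQLite will store a string in an INTEGER column, whereas PostgreSQL raises an error. The helper names are hypothetical.

```python
import sqlite3


def sqlite_like(value: str, pattern: str) -> bool:
    """Evaluate `value LIKE pattern` in an in-memory SQLite database."""
    conn = sqlite3.connect(":memory:")
    try:
        (matched,) = conn.execute("SELECT ? LIKE ?", (value, pattern)).fetchone()
        return bool(matched)
    finally:
        conn.close()


def sqlite_stores_text_in_integer_column() -> bool:
    """Insert a string into an INTEGER column and check whether SQLite keeps it."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE item (quantity INTEGER)")
        conn.execute("INSERT INTO item (quantity) VALUES (?)", ("not a number",))
        (stored,) = conn.execute("SELECT quantity FROM item").fetchone()
        return stored == "not a number"
    finally:
        conn.close()


if __name__ == "__main__":
    # PostgreSQL: 'ABC' LIKE 'abc' is false; SQLite's LIKE ignores ASCII case.
    print("SQLite 'ABC' LIKE 'abc':", sqlite_like("ABC", "abc"))
    # PostgreSQL rejects this insert with an error; SQLite silently accepts it.
    print("SQLite kept text in INTEGER column:", sqlite_stores_text_in_integer_column())
```

A test suite running on SQLite would pass in both cases, while the same code could behave differently or fail outright on PostgreSQL.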

Don't mock every database call.

Mocking is great for testing small units, but sometimes you need tests that cover the whole picture. Although hitting the database is slow, you need to do it to make sure your code works with the database correctly.

Yes, the Django ORM is tested and works fine, but are you using it correctly?
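The following stdlib-only sketch shows how a mock can hide a broken query. count_active_users and the users table are hypothetical. SQLite stands in for a real database only to keep the example self-contained. Against PostgreSQL, the same query would fail even more loudly, with a type error.

```python
import sqlite3
from unittest import mock


def count_active_users(conn) -> int:
    """Count active users. Bug: the column holds 1/0, but we compare to 'true'."""
    (count,) = conn.execute(
        "SELECT COUNT(*) FROM users WHERE active = 'true'"
    ).fetchone()
    return count


def check_with_mock() -> bool:
    """The mocked connection never runs the SQL, so the bug goes unnoticed."""
    conn = mock.Mock()
    conn.execute.return_value.fetchone.return_value = (2,)
    return count_active_users(conn) == 2


def check_with_real_database() -> bool:
    """A real database actually executes the query and exposes the bug."""
    conn = sqlite3.connect(":memory:")
    try:
        conn.execute("CREATE TABLE users (name TEXT, active INTEGER)")
        conn.executemany(
            "INSERT INTO users VALUES (?, ?)", [("ann", 1), ("bob", 1), ("cy", 0)]
        )
        return count_active_users(conn) == 2
    finally:
        conn.close()


if __name__ == "__main__":
    print("mocked test passes:", check_with_mock())                  # True
    print("real database test passes:", check_with_real_database())  # False
```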
